Concurrency

Concurrency and parallelism

Concurrent computing allows multiple tasks within an application to run in no particular order. In other words, one task does not need to finish before the next one begins. Concurrency is a property of the algorithm: we need to design our application to allow it.

Concurrent computing does not imply that execution occurs at the same instant. Parallel computing, by contrast, does mean execution at the same instant, and it takes advantage of systems with multiple cores to speed up computation. Parallelism is a property of the machine.

On the one hand, some applications are inherently concurrent. On the other hand, if we do not design for concurrency, our applications cannot take advantage of multi-core CPUs, and we will be limited to the capacity and performance of a single core.

Processes and threads

The scheduler (or planner) of a system is the mechanism that assigns tasks to workers. In an application, a task usually corresponds to a thread and a worker to a CPU core. When the scheduler replaces the task running on a worker with another one, it performs a context switch. Context switching is heavy for processes, which have a larger context, and light for threads, which have a smaller one.

The basic unit of execution of an operating system is the process, a collection of code, memory, data, and other resources. A process has an independent execution environment that simulates a computer; for example, it has its own independent memory space. A process is usually synonymous with a program or application, although an application can also consist of a set of processes.

A thread is a sequence of code that runs within the scope of the process, and can share data with other threads. A thread is the minimum sequence of instructions that a scheduler manages. A process can have multiple threads running concurrently within it.
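As a minimal sketch of threads sharing data inside one process, the following uses Python's standard `threading` module. The names `run_workers` and `worker` are hypothetical and chosen only for illustration.

```python
import threading


def run_workers(count: int) -> dict[str, int]:
    """Start `count` threads that all write into one shared dictionary."""
    results: dict[str, int] = {}  # a single object, visible to every thread

    def worker(name: str) -> None:
        # Each thread writes under its own key, so the writes do not conflict.
        results[name] = threading.get_ident()

    threads = [
        threading.Thread(target=worker, args=(f"worker-{i}",))
        for i in range(count)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until every thread has finished
    return results


if __name__ == "__main__":
    for name, ident in sorted(run_workers(3).items()):
        print(name, "ran on thread", ident)
```

All three threads see the same `results` object because they live in the same process. Separate processes would each get their own copy instead.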

When we develop an application, its data lives in two different memory areas, the call stack and the heap:

- Call stack: a data structure that stores the active routines of a thread, stacked in frames. A frame is created when a routine is called and deleted when the routine ends. Each frame contains:

  - The return address

  - The parameters of the routine

  - Local variables

- Heap: a dynamic memory space that is allocated when data is created and deallocated when it is deleted. Objects usually live here.
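To make the idea of stacked frames concrete, here is a small sketch that walks the active frames of the current thread with Python's `inspect` module. It assumes CPython, where `inspect.currentframe()` is available. Python exposes frames as ordinary objects, so this illustrates the stacking of routines rather than the low-level memory layout. The functions `active_frames`, `inner`, and `outer` are hypothetical.

```python
import inspect


def active_frames() -> list[tuple[str, list[str]]]:
    """Return (routine name, local variable names) for each active frame."""
    frame = inspect.currentframe()
    assert frame is not None  # assumption: running on CPython
    frame = frame.f_back  # skip the frame of active_frames itself
    frames = []
    while frame is not None:
        frames.append((frame.f_code.co_name, sorted(frame.f_locals)))
        frame = frame.f_back  # move to the caller's frame
    return frames


def inner(value: int) -> list[tuple[str, list[str]]]:
    doubled = value * 2  # a local variable stored in inner's frame
    return active_frames()


def outer(value: int) -> list[tuple[str, list[str]]]:
    return inner(value + 1)  # pushes a new frame on top of outer's


if __name__ == "__main__":
    for name, local_names in outer(1):
        print(name, local_names)
```

The most recently called routine comes first in the result, followed by its callers down to the module level.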

Applications of concurrency

Concurrency can be implemented in various ways, depending on the language and development context. Here are some possible use cases:

- In a UI, perform operations in a separate worker so that they do not block the interface.

- Implement alarms and timers.

- Implement parallel algorithms.

- Handle tasks from multiple concurrent clients that access shared resources.

Concurrency models

A task can be characterized, according to its type of activity, as:

- CPU-bound: a task that needs the CPU to perform intensive calculations.

- I/O-bound (Input/Output): a task that spends most of its time waiting for an input/output operation, such as reading from or writing to disk or the network.
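The following sketch shows why the distinction matters for I/O-bound tasks: while one task waits, another can use the worker. It uses `time.sleep` as a stand-in for a disk or network wait, and the names `fake_io`, `run_sequential`, and `run_threaded` are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_io(delay: float) -> float:
    """Simulate an I/O-bound task: almost all of its time is spent waiting."""
    time.sleep(delay)  # stands in for a disk or network wait
    return delay


def run_sequential(delays: list[float]) -> float:
    """Run the tasks one after another and return the elapsed seconds."""
    start = time.perf_counter()
    for delay in delays:
        fake_io(delay)
    return time.perf_counter() - start


def run_threaded(delays: list[float]) -> float:
    """Run the tasks on a thread pool so that their waits overlap."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=len(delays)) as pool:
        list(pool.map(fake_io, delays))  # consume results to wait for all
    return time.perf_counter() - start


if __name__ == "__main__":
    delays = [0.2, 0.2, 0.2, 0.2]
    print(f"sequential: {run_sequential(delays):.2f}s")
    print(f"threaded:   {run_threaded(delays):.2f}s")
```

The threaded run should take roughly as long as the longest single wait, because the waits overlap. A CPU-bound task would not benefit in the same way, since it needs the worker for the whole duration.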

Scheduler task assignment can be of two types: cooperative or preemptive.

- Cooperative: tasks manage their own life cycle and decide when to release the worker.

- Preemptive: the scheduler allocates a time slice to the task and takes the worker away from it when the slice expires.
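Cooperative assignment can be sketched with Python generators, where each `yield` is the point at which a task voluntarily releases the worker. This is a toy round-robin scheduler under that assumption, not how an operating system scheduler works. The names `task`, `run_round_robin`, and `demo` are hypothetical.

```python
from collections import deque
from typing import Generator, Iterable

Task = Generator[None, None, None]


def task(name: str, steps: int, log: list[str]) -> Task:
    """A task that does `steps` units of work, yielding after each one."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # cooperative: the task itself hands the worker back


def run_round_robin(tasks: Iterable[Task]) -> None:
    """Run tasks in turn on a single worker until all of them finish."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)  # run the task until its next yield
            ready.append(current)  # not finished: back of the queue
        except StopIteration:
            pass  # the task finished, so it is dropped


def demo() -> list[str]:
    log: list[str] = []
    run_round_robin([task("A", 2, log), task("B", 3, log)])
    return log


if __name__ == "__main__":
    print(demo())  # ['A:0', 'B:0', 'A:1', 'B:1', 'B:2']
```

A task that never yields would keep the worker forever, which is the main risk of the cooperative approach. A preemptive scheduler avoids this by interrupting tasks itself.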

The main challenge in implementing concurrency is coordinating tasks correctly and accessing shared resources safely. These are some of the available approaches:

- Single Threaded: we only have one thread that is shared between all tasks.

- Shared State (or shared memory): Two or more tasks share a state that they can all read and write to, and various mechanisms allow this to be done safely.

- Message Passing: Nothing is shared. Instead, messages are exchanged.

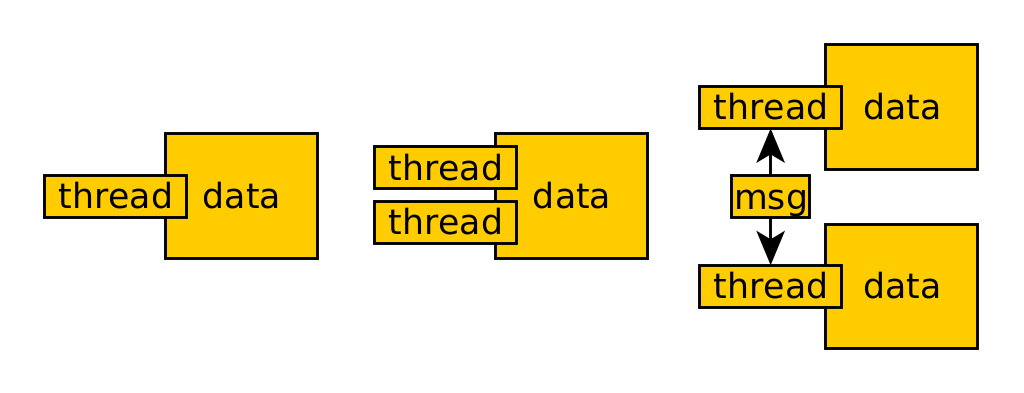

The diagram above shows the single-threaded, shared-memory, and message-passing models.

Single Threaded

This option simplifies concurrency design, as there is no need to use mechanisms to manage simultaneous access to shared resources. It has the disadvantage that tasks cannot be parallelized, but this is only a problem if they are CPU-bound.

A common example is the UI event loop. The loop is implemented with a queue that receives events, and each event is handled quickly because handlers only perform asynchronous operations.
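A queue-based event loop of this kind might look like the sketch below. It is a simplified illustration, not the implementation of any particular UI toolkit. The `EventLoop` class, its methods, and the `"quit"` event are assumptions made for the example.

```python
import queue
from typing import Callable, Optional

Handler = Callable[[object], None]


class EventLoop:
    """A single-threaded loop that takes events from a queue, one at a time."""

    def __init__(self) -> None:
        self._events: queue.Queue = queue.Queue()
        self._handlers: dict[str, Handler] = {}

    def on(self, kind: str, handler: Handler) -> None:
        """Register the handler for one kind of event."""
        self._handlers[kind] = handler

    def post(self, kind: str, payload: object = None) -> None:
        """Enqueue an event; it is handled later, in arrival order."""
        self._events.put((kind, payload))

    def run(self) -> None:
        """Handle events until a 'quit' event arrives."""
        while True:
            kind, payload = self._events.get()
            if kind == "quit":
                break
            handler: Optional[Handler] = self._handlers.get(kind)
            if handler is not None:
                handler(payload)  # must return quickly or the loop stalls


def demo() -> list[str]:
    seen: list[str] = []
    loop = EventLoop()
    loop.on("click", lambda pos: seen.append(f"click at {pos}"))
    loop.on("key", lambda key: seen.append(f"key {key}"))
    loop.post("click", (10, 20))
    loop.post("key", "q")
    loop.post("quit")
    loop.run()
    return seen


if __name__ == "__main__":
    print(demo())  # ['click at (10, 20)', 'key q']
```

Because only one thread runs the handlers, they need no locking. In exchange, a slow handler delays every event queued behind it.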

Shared State

Concurrent tasks interact by reading and writing shared, mutable objects in memory. This is complex, as you need locking mechanisms to coordinate the threads.

Let's imagine that threads A and B run the same code to access shared mutable objects. Code that multiple threads can execute simultaneously in a safe manner is called "thread-safe".
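As a minimal sketch of thread-safe code, the counter below protects its mutable state with a lock from Python's `threading` module. The `Counter` class and `count_with_threads` function are hypothetical names used only for this example.

```python
import threading


class Counter:
    """A counter whose state is protected by a lock."""

    def __init__(self) -> None:
        self._value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # Read-modify-write is not atomic, so only one thread may do it
        # at a time.
        with self._lock:
            self._value += 1

    @property
    def value(self) -> int:
        with self._lock:
            return self._value


def count_with_threads(n_threads: int, increments: int) -> int:
    """Have several threads increment one shared counter."""
    counter = Counter()

    def work() -> None:
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(count_with_threads(4, 10_000))  # 40000
```

Without the lock, two threads could read the same old value and one of the increments would be lost.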

There are four strategies we'll look at: confinement, immutability, thread-safe data types, and synchronization.

Message Passing

Concurrent tasks interact by sending messages to each other (1:1 or N:1) through a communication channel. Messages carry immutable objects, and each task's incoming messages are queued for handling. Communication can be synchronous or asynchronous, depending on whether the sender waits for the response.

Message passing can be implemented at two levels: between threads of the same process (e.g. queues and producer/consumer) or between processes in a network (e.g. using sockets).
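The thread-level variant can be sketched with Python's `queue.Queue` acting as the channel between a producer and a consumer. The names `producer`, `consumer`, `square_all`, and the `_DONE` sentinel are hypothetical. The messages are plain integers, which are immutable.

```python
import queue
import threading

_DONE = object()  # sentinel message that tells the consumer to stop


def producer(channel: queue.Queue, items: tuple[int, ...]) -> None:
    """Send each item as a message, then signal the end of the stream."""
    for item in items:
        channel.put(item)  # asynchronous send: does not wait for a reply
    channel.put(_DONE)


def consumer(channel: queue.Queue, results: list[int]) -> None:
    """Handle queued messages in arrival order until the sentinel arrives."""
    while True:
        message = channel.get()  # blocks until a message is available
        if message is _DONE:
            break
        results.append(message * message)


def square_all(items: tuple[int, ...]) -> list[int]:
    channel: queue.Queue = queue.Queue()
    results: list[int] = []  # written only by the consumer thread
    threads = [
        threading.Thread(target=producer, args=(channel, items)),
        threading.Thread(target=consumer, args=(channel, results)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # results is read only after the consumer has finished
    return results


if __name__ == "__main__":
    print(square_all((1, 2, 3)))  # [1, 4, 9]
```

The two threads never touch the same data at the same time. The queue is the only point of contact, and it handles the necessary locking internally.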